AI feels exciting for your business, but also slightly dangerous. Do you feel the same? Then you are in the right place. Most teams want smarter automation but worry about wrong predictions and compliance surprises. The truth is that AI can create real value only when it is built with guardrails.

This is where Responsible AI Governance becomes your safety net. It gives you clarity and control, so your models behave reliably even when data shifts or workloads grow.

Companies that follow governance frameworks report stronger customer trust and fewer operational risks.

In this guide, you will learn practical steps to govern AI with confidence and build systems that support your long-term growth.

What Is Responsible AI Governance?

Responsible AI governance means putting controls and accountability measures in place to ensure AI systems behave ethically, transparently and securely.

Simply put, Responsible AI Governance is the system of rules, checks and ongoing controls that help you build AI that is safe, fair, reliable and aligned with your business goals. Think of it as your AI’s guidebook. It keeps your models in line and stops problems before they grow into headlines.

Core Concepts You Should Know

1. Accountability: Who takes responsibility when AI goes wrong? If no one owns it, everyone suffers. A responsible AI governance framework ensures every model has a clear owner, reviewer and escalation path.

2. Transparency: People want to know why AI made a choice. Clear documentation helps teams trace decisions and improve trust.

3. Fairness: AI can unintentionally discriminate. Good governance prevents biased outcomes and protects your brand reputation.

4. Safety and Reliability: Your AI should behave as expected even when data shifts or volumes spike. Governance sets quality standards so your models stay stable over time.

Why Does It Matter?

Brands like Amazon and Apple faced criticism when their hiring or voice recognition systems behaved unfairly. If global giants can stumble, startups and midsize businesses are even more vulnerable.

What Are AI Governance Frameworks?

Frameworks act as ready-to-use blueprints. They help you create policies, workflows and monitoring steps. Here are the ones trusted globally:

- NIST AI RMF

- OECD AI Principles

- EU AI Act compliance models

- ISO 42001 AI Management System

If you are building AI-driven platforms or enterprise applications, aligning with these frameworks helps you stay compliant. It also boosts customer trust because these are globally recognized standards.

AI Ethics vs AI Governance

This confuses many teams, so let’s clear it up.

AI Ethics deals with values and ideals, like fairness or inclusivity. It guides how you want AI to behave.

AI Governance turns those values into real rules, processes and measurable controls.

Key Differences: AI Ethics vs AI Governance

| Aspect | AI Ethics | AI Governance |
|---|---|---|
| Focus | Principles & values | Implementation & enforcement |
| Example | Fairness, non-discrimination | Process audits, compliance reporting |
| Scope | Broad societal impact | Organizational risk management |

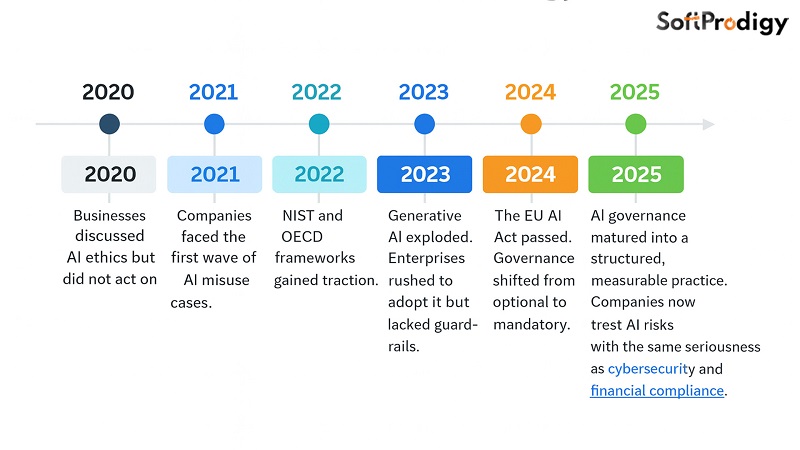

The Evolution of AI Governance (2020 to 2025)

AI governance did not start overnight. Here is a simple timeline:

- 2020: Businesses discussed AI ethics but did not act on it.

- 2021: Companies faced the first wave of AI misuse cases. Bias and misinformation issues created global concern.

- 2022: NIST and OECD frameworks gained traction. Responsibility became a business priority.

- 2023: Generative AI exploded. Enterprises rushed to adopt it but lacked guardrails.

- 2024: The EU AI Act passed. Governance shifted from optional to mandatory.

- 2025: AI governance matured into a structured, measurable practice. Companies now treat AI risks with the same seriousness as cybersecurity and financial compliance.

How Do You Measure AI Governance Effectiveness?

Here is where many businesses struggle. They set rules, but do not know whether the rules work. You can measure effectiveness with:

1. Incident Reduction Score: This metric helps you see if governance is actually preventing damage. Track the number of complaints, model failures, bias incidents or unexpected outputs before and after governance steps. If issues drop consistently, it means your guardrails work. If they increase, your system needs updated data checks or tighter monitoring.

2. Explainability Rate: This measures how many of your AI models can clearly show why they made a decision. If teams cannot explain a model’s output, it becomes risky for compliance and customer trust. A high explainability rate means your documentation, model design and oversight practices are strong enough to support audits, regulatory reviews and internal training needs.

3. Model Drift Detection Time: Drift happens when your AI’s performance changes because real-world data shifts. The faster your team detects it, the safer your system stays. This metric shows how quickly you catch early warning signs. Shorter detection time means your monitoring tools and evaluation workflows are functioning well and preventing costly failures before they escalate.

4. Documentation Completeness Score: Great AI governance depends on how well your models are documented. This score reflects whether your data sources, model versions, training steps, evaluation reports and decision logs are fully recorded. Complete documentation protects you during audits, reduces confusion during handovers and helps teams understand exactly how and why the model behaves in certain ways.

5. Stakeholder Trust Score: Trust is a direct signal of governance health. Survey internal users, customers or cross-functional teams and ask if they feel confident using your AI tools. Higher trust means your governance, transparency and risk controls are working. Lower scores show that people worry about reliability, fairness or clarity, which means your governance process needs improvement or better communication.
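Most of these scores are simple ratios, so they are easy to automate. The sketch below shows one way to compute three of them from counts you probably already collect. The `GovernanceSnapshot` fields and the sample numbers are hypothetical; drift detection time and the trust score come from monitoring logs and surveys, so they are left out here.

```python
from dataclasses import dataclass


@dataclass
class GovernanceSnapshot:
    """Hypothetical counts collected for one reporting period."""
    incidents: int            # complaints, model failures, bias incidents, unexpected outputs
    models_total: int
    models_explainable: int   # models whose decisions can be explained on request
    doc_items_required: int   # e.g. data sources, versions, training steps, eval reports, decision logs
    doc_items_present: int


def incident_reduction(before: GovernanceSnapshot, after: GovernanceSnapshot) -> float:
    """Percentage drop in incidents; a negative value means incidents increased."""
    if before.incidents == 0:
        return 0.0  # nothing to reduce from
    return 100.0 * (before.incidents - after.incidents) / before.incidents


def explainability_rate(s: GovernanceSnapshot) -> float:
    return 100.0 * s.models_explainable / s.models_total if s.models_total else 0.0


def documentation_completeness(s: GovernanceSnapshot) -> float:
    return 100.0 * s.doc_items_present / s.doc_items_required if s.doc_items_required else 0.0


before = GovernanceSnapshot(incidents=40, models_total=10, models_explainable=4,
                            doc_items_required=50, doc_items_present=30)
after = GovernanceSnapshot(incidents=26, models_total=10, models_explainable=7,
                           doc_items_required=50, doc_items_present=45)

print(f"Incident reduction: {incident_reduction(before, after):.1f}%")               # 35.0%
print(f"Explainability rate: {explainability_rate(after):.1f}%")                     # 70.0%
print(f"Documentation completeness: {documentation_completeness(after):.1f}%")       # 90.0%
```

Tracking these numbers per quarter, rather than once, is what turns them into a trend you can act on.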

Why Does Responsible AI Governance Matter?

1. Legal and Regulatory Risks: AI has moved from an innovation advantage to a compliance requirement. In 2024, the EU AI Act triggered the biggest regulatory shift the AI world has seen. It requires companies, especially those building high-risk systems, to prove they follow responsible development practices. For the most serious violations, penalties reach up to 35 million euros or 7 percent of global annual turnover, whichever is higher. Even if you operate outside Europe, global partners expect compliance. This means your AI must be transparent, safe and documented.

2. Reputational and Financial Consequences: Statista reports that 72 percent of consumers are more loyal to brands that use AI responsibly. On the other hand, companies that misuse AI face a double loss. They lose customer trust and suffer financial damage. One example that shook the industry was the biased credit scoring controversy involving a well-known financial brand. The company spent months repairing its reputation. Governance could have prevented that.

3. Building Stakeholder Trust: Customers want clarity. So do investors, regulators and internal teams. When your AI governance is strong, people trust your decisions. They also trust your ability to scale without creating risks.

4. Competitive Advantage Through Responsible AI: A McKinsey study showed that companies with strong AI governance outperform competitors by up to 20 percent in efficiency and risk reduction. Responsible AI is not a burden. It is a power tool. It helps you innovate faster because you are not constantly fixing problems.

Which Global AI Regulations Should You Follow?

Do you want your AI to be trusted, compliant and scalable? Then you need to understand the global rules that shape how AI systems must operate. By 2025, regulators have moved from suggestions to strict expectations.

Many leaders feel confused about what applies to them, so let us break it down in a simple, practical way. These standards are not here to slow you down. They exist to protect your business from penalties, customer loss and operational risks. And when you align your systems with them, you gain a long-term advantage.

1. NIST AI Risk Management Framework:

The NIST AI RMF, released in January 2023, has become a widely used global reference for safe AI development. It guides you through the complete AI lifecycle and helps you identify risks early.

It is built on four core functions:

- Govern

- Map

- Measure

- Manage

If you work with sensitive data or operate in regulated industries, following NIST makes your AI decisions more defensible. Teams use it to run risk reviews, test fairness and document model behavior.

2. OECD AI Principles

These principles focus on building AI that benefits people and society. They highlight fairness, transparency and accountability. Even if you are a small company, using OECD guidelines gives your AI a strong ethical base.

Many brands use these principles to train internal teams on responsible AI development. It helps everyone speak the same language and avoid conflicting decisions.

3. EU AI Act (Phased In From 2024)

The EU AI Act is the world’s first comprehensive AI law. It affects any business that sells to or serves European customers, wherever that business is based.

It classifies AI systems into:

- Unacceptable risk

- High risk

- Limited risk

- Minimal risk

If your product uses AI for credit scoring, hiring, healthcare, biometric identification or safety-critical functions, it is likely to fall under high risk. You will need clear documentation, oversight, testing reports and human supervision.
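To make the tiers concrete, here is a rough triage helper for an internal AI inventory. It is a simplified sketch, not a legal classification: the use-case tags and the mapping are hypothetical and cover only the examples mentioned in this guide, so always confirm a system's tier against the Act itself.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical, simplified tag sets; a real mapping needs legal review.
PROHIBITED_USES = {"social_scoring", "manipulative_techniques"}
HIGH_RISK_USES = {"credit_scoring", "hiring", "medical_device",
                  "biometric_identification", "critical_infrastructure"}
TRANSPARENCY_USES = {"chatbot", "generated_content"}  # limited risk: users must be told it is AI


def triage(use_cases: set[str]) -> RiskTier:
    """Return the strictest tier triggered by any of a system's use cases."""
    if use_cases & PROHIBITED_USES:
        return RiskTier.UNACCEPTABLE
    if use_cases & HIGH_RISK_USES:
        return RiskTier.HIGH
    if use_cases & TRANSPARENCY_USES:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


print(triage({"chatbot", "hiring"}))         # RiskTier.HIGH
print(triage({"product_recommendations"}))   # RiskTier.MINIMAL
```

Returning the strictest matching tier is deliberate: a chatbot that also screens job applicants should be treated as high risk, not limited risk.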

Penalties can reach up to 35 million euros. This is why companies worldwide are adopting EU AI Act principles even if they do not operate in Europe. It builds safety and protects brand reputation.

4. ISO 42001 AI Management System Standard

ISO 42001 (formally ISO/IEC 42001:2023) is new and quickly gaining adoption. It sets out how to build an end-to-end AI governance system inside your organization, covering:

- Roles and responsibilities

- Policies

- Data controls

- Testing standards

- Monitoring and auditing

It works like ISO 27001 but for AI. If you want your business to look credible and enterprise-ready, aligning with ISO 42001 helps you stand out.

5. United States Executive Orders and Global Regulations

The US is pushing for advanced safety testing, transparency and reporting. Several government bodies now expect companies to show:

- Clear AI risk assessments

- Bias testing results

- Model documentation

- Incident reporting workflows

Countries like Canada, Japan, Singapore and the UK have also released governance guidelines. Even though these differ slightly, the core message is the same. Your AI must be safe, traceable and understandable.

Why This Matters For You

You do not need to follow every standard in full. You only need a structure that keeps your AI aligned with what regulators expect in 2025.

Combine the following three and you get a strong, future-proof foundation for your business:

- NIST for risk

- EU AI Act for compliance

- ISO 42001 for internal governance

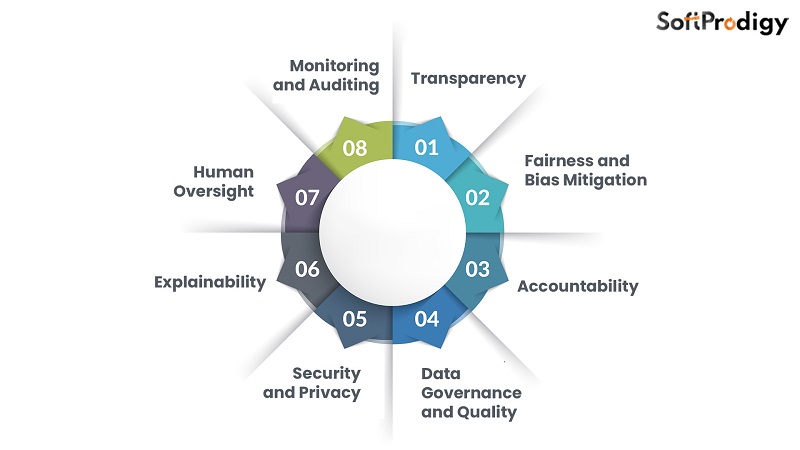

The 6 Biggest AI Risks and How to Manage Them

Most teams do not fear AI. They fear the mistakes AI can make when no one is watching. I hear questions like, “What if the model misjudges a customer?”, “What if it hallucinates?”, or “What if the system becomes unpredictable after a few months?”

You are not overthinking. These risks are real, and they grow as your models scale. Let’s break down the most common failure modes and how you can stay protected.

1. Algorithmic Bias: Bias is one of the biggest concerns for product teams. It sneaks in quietly through incomplete or skewed data. A hiring model once used by a global tech company penalized female applicants because the historical data it learned from favored male candidates.

Your AI learns whatever pattern the data gives it. To fix this, run fairness tests, use balanced datasets and review high impact decisions manually. SoftProdigy helps teams set up bias detection and responsible data pipelines: https://softprodigy.com/services/
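A common first fairness test is the disparate impact ratio: compare each group's selection rate with the rate of the best-off group. US employment guidance uses a four-fifths (80 percent) rule of thumb, so the sketch below uses 0.8 as its default threshold. The group names and decisions are made-up sample data.

```python
def selection_rates(outcomes: dict[str, list[int]]) -> dict[str, float]:
    """Share of positive decisions (1 = selected, 0 = rejected) for each group."""
    return {group: sum(d) / len(d) for group, d in outcomes.items() if d}


def disparate_impact_flags(outcomes: dict[str, list[int]], threshold: float = 0.8) -> dict[str, bool]:
    """Flag groups whose selection rate is below `threshold` times the highest group's rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        return {group: False for group in rates}  # nobody was selected, nothing to compare
    return {group: rate / best < threshold for group, rate in rates.items()}


decisions = {
    "group_a": [1, 1, 0, 1, 1, 0, 1, 1, 0, 1],  # 7 of 10 selected
    "group_b": [1, 0, 0, 1, 0, 0, 1, 0, 0, 1],  # 4 of 10 selected
}
print(selection_rates(decisions))         # {'group_a': 0.7, 'group_b': 0.4}
print(disparate_impact_flags(decisions))  # {'group_a': False, 'group_b': True}
```

A flagged group is a signal for manual review of the data and the model, not proof of discrimination on its own.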

2. Data Quality Risks: AI performance depends on the quality of the data it consumes. Inconsistent, outdated or mislabelled data leads to inaccurate predictions. I have seen e-commerce companies lose revenue because their recommendation systems were trained on old seasonal data.

Good data governance makes a huge difference. Audit your sources, check freshness and track lineage. Clean data often improves accuracy faster than retraining a complex model.

3. Privacy and Security Threats: AI systems are becoming targets for cyberattacks. Threat actors try to steal models, corrupt training datasets or inject malicious inputs. A small, deliberately crafted change to the data can flip a model’s decision completely.

Businesses must secure AI models like they secure financial systems. Use encryption, access control, input filters and continuous monitoring.

4. Model Drift: Drift happens when the world changes but your model does not. Customer behavior, market trends and language patterns evolve fast. A model that performed well during training may fail six months later.

A retail company once saw a drop in sales because its pricing model failed to adapt to new buying patterns. To prevent this, monitor model outputs, set threshold alerts and schedule periodic revalidation.
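One widely used way to put a number on drift is the Population Stability Index (PSI), which compares how a feature or score is distributed at training time and in production. The sketch below bins values over the training range; the bin count, the small `eps` floor for empty bins and the sample data are assumptions. A common rule of thumb treats PSI below 0.1 as stable, 0.1 to 0.25 as a moderate shift and above 0.25 as significant, but thresholds should be tuned per model.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between a training-time sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid dividing by zero for a constant feature

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp so live values outside the training range land in the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm below stays defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


train_scores = [i / 100 for i in range(100)]          # spread evenly over [0, 1)
live_similar = [i / 100 + 0.005 for i in range(100)]  # almost the same distribution
live_shifted = [0.5 + i / 200 for i in range(100)]    # everything moved into the upper half

print(f"similar: {psi(train_scores, live_similar):.3f}")
print(f"shifted: {psi(train_scores, live_shifted):.3f}")
```

Running this on a schedule and alerting when PSI crosses your chosen threshold is one concrete form of the threshold alerts described above.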

5. Hallucinations and Incorrect Outputs: Generative AI can produce responses that look accurate but are completely incorrect. This is dangerous in customer support, legal work and finance. Many brands faced backlash because chatbots shared false or misleading information.

You can control this by grounding models with trusted data sources, setting guardrails and reviewing outputs for sensitive use cases. Always validate high-stakes decisions before they reach customers.

6. Black Box Decisions: AI becomes risky when no one can interpret its behavior. Black box outputs create confusion for teams, slow compliance reviews and frustrate users. Imagine denying a loan without being able to explain the reason. That damages trust instantly.

Add explainability tools, use transparent model types when possible and maintain documentation that outlines decision paths.

Why Does Addressing These Risks Matter for You?

Every risk you manage early saves you from a much bigger failure later. Companies that proactively monitor risks report smoother launches, fewer customer escalations and better stakeholder trust. With the right workflows, tools and governance, you can turn AI from a potential threat into a long-term advantage.

How Do You Actually Implement Responsible AI Governance?

Most businesses understand that AI governance is important, but the real struggle begins when they try to implement it. Teams ask questions like, “Where do we begin?”, “Do we need a big team?”, or “What tools do we set up first?”

Here is a step-by-step roadmap that works for startups, enterprises and growing digital platforms. Follow this sequence and your AI will stay stable, trusted and compliant as it scales.

Step 1: Build an AI Inventory and Risk Profile

You cannot govern what you cannot see. Start by listing every AI system you use.

This includes:

- Recommendation engines

- Chatbots

- Fraud detectors

- Scoring models

- Third-party AI tools

Create a simple sheet that captures purpose, data used, risks and who maintains the system. The World Economic Forum reports that 62 percent of AI failures occur because companies lack visibility into their own AI stack. Once you map everything, assign each system a risk level. High-risk systems require more testing and human checks.
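If a spreadsheet feels too loose, the same inventory can live in code or a small database. This sketch uses a hypothetical `AISystem` record with the fields suggested above and a three-level risk scale, then lists systems with no owner and systems that need extra testing and human checks. The entries are invented examples.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class AISystem:
    name: str
    purpose: str
    data_used: List[str]
    owner: Optional[str]   # who maintains the system; None means nobody is assigned yet
    risk_level: str        # hypothetical scale: "high", "medium" or "low"
    third_party: bool = False


inventory = [
    AISystem("support-chatbot", "Answer customer questions", ["tickets", "FAQ pages"],
             "cx-team", "medium", third_party=True),
    AISystem("loan-scorer", "Score credit applications", ["income", "repayment history"],
             "risk-team", "high"),
    AISystem("product-recs", "Recommend products", ["clickstream"], None, "low"),
]


def needs_attention(systems: List[AISystem]) -> Tuple[List[str], List[str]]:
    """Return (systems without an owner, high-risk systems needing more testing and human checks)."""
    unowned = [s.name for s in systems if not s.owner]
    high_risk = [s.name for s in systems if s.risk_level == "high"]
    return unowned, high_risk


print(needs_attention(inventory))  # (['product-recs'], ['loan-scorer'])
```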

Step 2: Set Up Governance Roles and Responsibilities

AI governance fails when everyone assumes someone else is in charge. Assign clear ownership early. Your governance group should include:

- A responsible model owner

- A reviewer for fairness and compliance

- A data quality manager

- A technical lead

- A business stakeholder

Companies like Netflix and PayPal use a similar structure. It keeps decisions fast and avoids confusion when issues appear. Once ownership is set, document who approves models, who monitors them and who responds to incidents.

Step 3: Create Policies and Ethical Development Guidelines

Policies guide how your teams build and deploy AI. They should cover:

- Data collection rules

- Labeling standards

- Bias testing

- Security requirements

- Privacy protection

- Approval workflows

Clear policies reduce mistakes and give new team members a structured playbook. A Harvard Business Review survey found that teams with written AI policies are 45 percent less likely to encounter serious failures.

Step 4: Introduce Risk Assessments and Pre-Deployment Checks

Before launching any model, run a risk assessment.

Ask questions like:

- Could this output harm a customer?

- Is the data biased?

- Are we transparent about how decisions are made?

- Does a human review high-impact decisions?

A financial brand once avoided a major compliance issue because a pre-deployment check showed their credit scoring model was influenced by outdated income data. Catching problems early saves you from bigger failures later.
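These questions work best as a hard gate in your release process rather than a meeting agenda. The sketch below turns them into named checks. The check names, the rule that human review is mandatory only for high-risk systems and the sample results are assumptions to adapt to your own policy.

```python
# Hypothetical pre-deployment checks, phrased so that True means "passed".
CHECKS = {
    "harm_assessed": "Potential customer harm has been assessed and mitigated",
    "bias_tested": "Training data and outputs have been tested for bias",
    "decisions_documented": "How decisions are made is documented and can be explained",
    "human_review": "A human reviews high-impact decisions",
}


def deployment_blockers(results: dict[str, bool], high_risk: bool) -> list[str]:
    """Return unresolved checks; an empty list means the model may proceed."""
    required = CHECKS if high_risk else {k: v for k, v in CHECKS.items() if k != "human_review"}
    # A check that was never run counts as failed.
    return [desc for key, desc in required.items() if not results.get(key, False)]


results = {"harm_assessed": True, "bias_tested": True, "decisions_documented": False}
for blocker in deployment_blockers(results, high_risk=True):
    print("BLOCKED:", blocker)
```

Treating a missing answer as a failure is the safer default: nobody can skip a check simply by not filling it in.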

Step 5: Put Technical Controls and Guardrails in Place

Governance is not only paperwork. You need guardrails inside the model pipeline.

Set up:

- Bias testing tools

- Anomaly detection

- Model explainability tools

- Input and output filters

- Access control

- Robust documentation

These controls ensure your model behaves consistently in real-world conditions. Companies like Google and Microsoft have reported that technical guardrails significantly reduced their post-launch incidents. Good controls protect both your customers and your team.

Step 6: Set Up Continuous Monitoring, Alerts and Audits

Models drift. Data shifts. Context changes. Monitoring helps you catch issues before users notice them.

Track:

- Accuracy

- Drift

- Decision distribution

- Response times

- User feedback patterns

Set alert thresholds so your team receives notifications when performance drops. Run audits every few weeks for high-risk models. This keeps your AI predictable and safe.
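Alert thresholds can live in plain configuration so reviewers can see and change them. In this sketch the metric names and limits are hypothetical: `psi` is assumed to come from a drift check such as the Population Stability Index, and `approval_rate_change` stands in for a shift in decision distribution compared with a baseline period.

```python
# (metric, "min" or "max", limit): hypothetical values, tune them per model
ALERT_RULES = [
    ("accuracy", "min", 0.90),
    ("psi", "max", 0.25),                     # drift score
    ("approval_rate_change", "max", 0.05),    # absolute shift in decisions vs. baseline
    ("p95_latency_ms", "max", 800.0),
    ("negative_feedback_rate", "max", 0.10),  # share of complaints or thumbs-down
]


def check_alerts(metrics: dict[str, float]) -> list[str]:
    """Compare the latest metrics with the rules and return human-readable alerts."""
    alerts = []
    for name, kind, limit in ALERT_RULES:
        value = metrics.get(name)
        if value is None:
            alerts.append(f"{name}: metric missing")  # a silent pipeline is a warning sign too
        elif (kind == "min" and value < limit) or (kind == "max" and value > limit):
            alerts.append(f"{name}: {value} breaches {kind} threshold {limit}")
    return alerts


today = {"accuracy": 0.87, "psi": 0.12, "approval_rate_change": 0.02,
         "p95_latency_ms": 640.0, "negative_feedback_rate": 0.04}
for alert in check_alerts(today):
    print("ALERT", alert)  # ALERT accuracy: 0.87 breaches min threshold 0.9
```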

Step 7: Build a Culture of Continuous Improvement

Governance is not a one-time setup. Your models evolve and so should your guardrails.

Revisit policies. Update risk scores. Refresh datasets. Document major incidents and lessons.

Teams that follow a continuous improvement approach report 30 to 40 percent fewer operational issues, according to a Deloitte study. When governance becomes part of your culture, your AI stays sustainable in the long run.

What Tools and Metrics Do You Actually Need to Govern AI Effectively?

Most teams reach this point and ask the same question. “What tools should we use, and how do we know our governance is working?”

There are thousands of AI tools on the market. Testing all of them is impossible. So let’s cut through the noise. You need only a small set of tools to monitor risk, explain decisions and track performance. With the right stack, your AI stays predictable even under pressure.

1. Tools for Bias Detection: How Do You Ensure Fair Decisions?

Bias detection tools scan your model for unfair patterns. They compare outcomes across different demographic groups and highlight where unfairness may appear. A global banking brand reduced customer escalations by 32 percent after adding bias checks to its loan scoring system.

Look for tools that test:

- Demographic parity

- Equal opportunity

- Feature sensitivity
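The first two checks can be computed directly from logged decisions. Demographic parity compares the share of positive decisions across groups; equal opportunity compares true positive rates, meaning how often genuinely qualified people are approved. The sketch below reports each as the gap between the best and worst group, using invented sample data. Feature sensitivity usually needs access to the model itself (for example, perturbing one input and watching the output), so it is not shown.

```python
def rate(values: list[int]) -> float:
    # Empty groups return 0.0 here; in practice, report them separately.
    return sum(values) / len(values) if values else 0.0


def fairness_gaps(y_true: list[int], y_pred: list[int], groups: list[str]) -> tuple[float, float]:
    """Return (demographic parity gap, equal opportunity gap) across groups."""
    labels = sorted(set(groups))
    # Demographic parity: share of positive decisions per group.
    positive_rate = {
        g: rate([p for p, grp in zip(y_pred, groups) if grp == g]) for g in labels
    }
    # Equal opportunity: true positive rate per group (approvals among truly qualified cases).
    tpr = {
        g: rate([p for t, p, grp in zip(y_true, y_pred, groups) if grp == g and t == 1])
        for g in labels
    }
    dp_gap = max(positive_rate.values()) - min(positive_rate.values())
    eo_gap = max(tpr.values()) - min(tpr.values())
    return dp_gap, eo_gap


y_true = [1, 1, 0, 1, 0, 1, 1, 0, 1, 0]  # 1 = the applicant was actually creditworthy
y_pred = [1, 1, 0, 1, 0, 1, 0, 0, 0, 0]  # 1 = the model approved the applicant
groups = ["a"] * 5 + ["b"] * 5

dp, eo = fairness_gaps(y_true, y_pred, groups)
print(f"demographic parity gap: {dp:.2f}")  # 0.40
print(f"equal opportunity gap: {eo:.2f}")   # 0.67
```

The two metrics can disagree, so decide up front which one matters most for each use case instead of picking whichever looks better after the fact.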

2. Model Monitoring Systems: How Do You Catch Problems Before Users Complain?

Monitoring tools track how your AI behaves in real time. They flag unusual patterns, sudden drops in accuracy or shifts in data. Retail brands use monitoring dashboards to track recommendation models so they do not show irrelevant products during peak sales.

Good monitoring tools track:

- Accuracy fluctuations

- Drift signals

- Outlier responses

- Distribution changes

These tools protect your system from silent failures that damage trust.

How Do You Prove Why a Model Made a Decision?

Users lose trust when they do not understand why AI said yes or no. Explainability tools help teams break down each decision into understandable pieces. They show which features influenced the output and by how much.

Healthcare companies rely on explainability to validate diagnostic models. If your AI influences real people, you need transparency. It also helps during audits by giving regulators clear reasoning behind each prediction.
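For a linear scoring model, "which features influenced the output and by how much" has an exact answer: each feature contributes its weight times how far the input is from a reference (baseline) value. The sketch below uses hypothetical credit-style features, weights and baseline. For non-linear models, explainability libraries such as SHAP or LIME estimate similar per-feature contributions.

```python
def explain_linear(weights: dict[str, float], bias: float,
                   x: dict[str, float], baseline: dict[str, float]):
    """Score a linear model and break the score into per-feature contributions.

    Each contribution is weight * (value - baseline value), so the contributions add up
    to the difference between this input's score and the baseline input's score.
    """
    score = bias + sum(w * x[f] for f, w in weights.items())
    contributions = [(f, w * (x[f] - baseline[f])) for f, w in weights.items()]
    contributions.sort(key=lambda item: abs(item[1]), reverse=True)  # biggest influence first
    return score, contributions


weights = {"income_k": 0.02, "debt_ratio": -1.5, "late_payments": -0.4}
bias = 0.1
baseline = {"income_k": 50, "debt_ratio": 0.3, "late_payments": 1}   # e.g. a typical applicant
applicant = {"income_k": 40, "debt_ratio": 0.6, "late_payments": 3}

score, contributions = explain_linear(weights, bias, applicant, baseline)
print(f"score: {score:.2f}")
for feature, contribution in contributions:
    print(f"  {feature}: {contribution:+.2f}")
```

An output like this gives reviewers and auditors a plain statement such as "late payments lowered the score the most", which is the kind of reasoning regulators ask for.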

Can You Track Where Your Data Came From?

Many AI failures happen because teams cannot trace how data was collected or processed. Data lineage tools help you track data sources, transformations, permissions and quality.

This is crucial when you deal with personal or sensitive data. A leading logistics brand avoided a major compliance fine because its lineage system proved that its training data followed privacy guidelines.
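At its simplest, lineage means recording where data came from, under what permission, and what was done to it, with fingerprints that let you prove later exactly which data a model saw. The sketch below is a minimal, hypothetical record built on SHA-256 hashes; the source name, legal basis and transformation step are illustrative. Dedicated lineage tools do the same thing at pipeline scale.

```python
import hashlib
import json
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone


def fingerprint(rows: list[dict]) -> str:
    """Stable SHA-256 hash of a dataset (key order does not matter)."""
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


@dataclass
class LineageRecord:
    source: str       # hypothetical source identifier, e.g. a table or export name
    legal_basis: str  # whatever permission your privacy policy requires
    input_hash: str
    steps: list[dict] = field(default_factory=list)

    def add_step(self, name: str, rows: list[dict]) -> list[dict]:
        """Record a transformation and the hash of its output, then pass the rows through."""
        self.steps.append({
            "step": name,
            "output_hash": fingerprint(rows),
            "at": datetime.now(timezone.utc).isoformat(),
        })
        return rows


raw = [{"id": 1, "email": "a@example.com", "spend": 120},
       {"id": 2, "email": "b@example.com", "spend": 80}]
record = LineageRecord(source="crm_export", legal_basis="customer consent",
                       input_hash=fingerprint(raw))
anonymized = record.add_step("drop_email", [{k: v for k, v in r.items() if k != "email"} for r in raw])
print(json.dumps(asdict(record), indent=2))
```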

Do You Know Which Model Is Running Right Now?

A model registry keeps track of every version of your AI model. It stores training details, parameters, evaluation scores and deployment history. This prevents confusion when multiple teams work on the same model.

Imagine discovering an unexpected output only to realize an older version accidentally went live. Registries eliminate this chaos. They help your team roll back models safely and track improvements over time.
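The core idea of a registry fits in a few lines: keep every version with its parameters and evaluation scores, record which one is live, and make rollback a single call. This in-memory `ModelRegistry` is a hypothetical sketch; production teams typically use a managed registry (MLflow's Model Registry is one example) backed by persistent storage.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class ModelVersion:
    version: int
    params: dict
    eval_scores: dict


class ModelRegistry:
    """Minimal in-memory registry: every version is kept and one of them is live."""

    def __init__(self) -> None:
        self.versions: Dict[int, ModelVersion] = {}
        self.deployments: List[int] = []             # stack of deployed versions, most recent last
        self.history: List[Tuple[str, int]] = []     # append-only log of deploys and rollbacks

    def register(self, params: dict, eval_scores: dict) -> int:
        version = len(self.versions) + 1
        self.versions[version] = ModelVersion(version, params, eval_scores)
        return version

    def deploy(self, version: int) -> None:
        if version not in self.versions:
            raise KeyError(f"unknown model version {version}")
        self.deployments.append(version)
        self.history.append(("deploy", version))

    @property
    def live(self) -> Optional[int]:
        return self.deployments[-1] if self.deployments else None

    def rollback(self) -> int:
        """Undo the latest deployment and return the version that is live again."""
        if len(self.deployments) < 2:
            raise RuntimeError("no earlier deployment to roll back to")
        self.deployments.pop()
        restored = self.deployments[-1]
        self.history.append(("rollback", restored))
        return restored


registry = ModelRegistry()
v1 = registry.register({"max_depth": 4}, {"auc": 0.81})
v2 = registry.register({"max_depth": 8}, {"auc": 0.84})
registry.deploy(v1)
registry.deploy(v2)
print("live:", registry.live)                    # live: 2
print("rolled back to:", registry.rollback())    # rolled back to: 1
```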

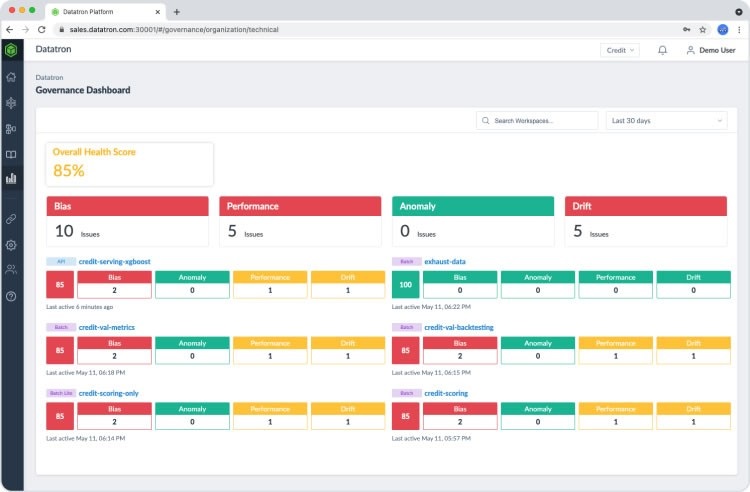

How Do You Keep Leadership and Teams Aligned?

Governance dashboards bring all your AI metrics into one place. They show:

- Model health

- Risks

- Audit status

- Fairness scores

- Compliance readiness

Leaders make better decisions when the picture is clear. A Deloitte study showed that teams with governance dashboards reduce decision bottlenecks by almost 38 percent because everyone sees the same information in real time.

Audit Logs and Reporting Tools

Audit logs keep a record of every action linked to your AI. They store changes, approvals, data updates and monitoring alerts. These logs protect you during regulatory checks or internal reviews.

When a European telecom company faced an AI-related investigation, its complete audit trail helped it demonstrate compliance within hours. Without logs, such reviews can take months.
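One way to make an audit trail hard to tamper with is to chain entries together: each entry stores the hash of the one before it, so editing or deleting an earlier record breaks verification. The sketch below is a minimal, hypothetical in-memory version; a real system would also write entries to append-only, access-controlled storage.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """Append-only log where each entry includes the hash of the previous entry."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, actor: str, action: str, details: dict) -> dict:
        entry = {
            "at": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,
            "details": details,
            "prev_hash": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    @staticmethod
    def _digest(entry: dict) -> str:
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def verify(self) -> bool:
        """Recompute every hash and check that each entry points at its predecessor."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.record("alice", "approve_model", {"model": "loan-scorer", "version": 2})
log.record("monitor", "drift_alert", {"model": "loan-scorer", "psi": 0.31})
print(log.verify())                        # True
log.entries[0]["details"]["version"] = 3   # simulate someone editing history
print(log.verify())                        # False
```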

Essential Metrics That Show Your Governance Is Working

Tools help you run your AI. Metrics help you measure if governance is effective. Focus on these:

- Drift detection time

- Fairness (bias) score

- Explainability coverage

- Incident reduction rate

- Documentation completeness

- Model approval turnaround time

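These metrics show whether your AI is becoming safer, more stable and more reliable. If they move in the right direction over time (shorter detection and approval times, higher fairness, explainability and documentation scores, fewer incidents), your governance is doing its job.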

How to Choose the Right Tools Without Getting Overwhelmed?

Do not pick tools because they are popular. Pick tools that match your risk level and use case.

Ask yourself:

- Does this tool solve a real problem for my model?

- Can my team use it easily?

- Does it integrate with my pipeline?

- Does it support compliance needs?

If you are unsure which tools your business actually needs, SoftProdigy can help you select, integrate and customize a governance stack that fits your industry and AI maturity level.

What Does the Future of AI Governance Look Like From 2025 to 2030?

Every leader adopting AI today has one big question in mind. “How will AI governance evolve in the next few years, and how do I stay ready for it?” The truth is that governance will only grow stronger. Regulations will tighten. Expectations from users will rise. And businesses that prepare early will have a clear advantage.

Let’s look at what is coming and how it will shape your AI roadmap.

1. Autonomous Governance Systems: Will AI Start Governing AI?

You will soon see AI systems monitoring other AI models. Early versions already exist. They scan for drift, detect anomalies and flag risky decisions faster than humans can.

Companies like Google and Meta have begun using automated oversight to check large-scale models. This trend will grow. It helps teams reduce manual work and respond to problems instantly.

Automated governance keeps your AI stable even during growth. It also reduces the workload on your teams, allowing them to focus on improvement instead of crisis control.

2. AI-Driven Compliance: Will Regulations Become Easier to Manage?

Regulatory environments are expanding fast. The EU AI Act is only the first wave. But here is the good news: AI tools will soon automate compliance tasks such as documentation, audit logging and evidence collection.

Imagine completing compliance checks in hours instead of months.

Companies with strong governance structures will benefit the most from AI-driven compliance. They will adapt faster and avoid fines that slow down the competition.

3. Real Time Auditing: Will AI Review Itself Every Second?

Manual audits happen yearly or quarterly. AI audits will soon happen continuously. Retailers, fintech companies, healthcare providers and logistics platforms will use real-time auditing tools to catch errors the moment they appear.

This reduces the chance of customer impact. It also gives leaders an accurate view of AI performance at any moment.

4. Cross-Border Governance Standards: Will Countries Start Agreeing on One Framework?

Over the next five years, expect stronger alignment across countries. The goal is simple. Make AI safer without limiting innovation.

The United States, Singapore, Canada, India and the European Union are already discussing shared governance objectives. This means your AI systems will need to follow a more unified global standard.

Why does this matter?

You can build once and operate anywhere. Standardization reduces friction, expands your reach and simplifies compliance for your product teams.

5. Governance for Agentic AI: What Happens When AI Starts Taking Independent Actions?

Agentic AI systems can take actions without waiting for human input. They complete tasks, run workflows, and make decisions in dynamic environments. While powerful, they need strong oversight because they can move faster than manual review.

Future governance frameworks will focus on:

- Boundary setting

- Action monitoring

- Goal alignment

- Fail-safe triggers

If your business plans to use agentic AI, you will need stricter controls and clearer accountability. This is where early investment in governance gives you a head start.
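Three of these controls can be enforced in code around the agent: an allowlist of actions (boundary setting), a log of what was approved (action monitoring) and hard limits that stop the agent (fail-safe triggers). Goal alignment still needs evaluation and human review. The `AgentGuardrails` class, action names and budget below are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class AgentGuardrails:
    allowed_actions: set[str]          # boundary setting: anything else is refused
    max_spend_per_run: float           # hypothetical budget limit per run
    max_actions_per_run: int = 20      # stops runaway loops
    spent: float = 0.0
    actions_taken: list[str] = field(default_factory=list)  # action monitoring
    halted: bool = False

    def authorize(self, action: str, cost: float = 0.0) -> bool:
        """Approve or refuse a proposed action before the agent executes it."""
        if self.halted or action not in self.allowed_actions:
            return False
        if (self.spent + cost > self.max_spend_per_run
                or len(self.actions_taken) >= self.max_actions_per_run):
            self.halted = True  # fail-safe trigger: stop the agent and escalate to a human
            return False
        self.spent += cost
        self.actions_taken.append(action)
        return True


guard = AgentGuardrails(allowed_actions={"search_catalog", "issue_refund"}, max_spend_per_run=100.0)
print(guard.authorize("search_catalog"))            # True
print(guard.authorize("delete_account"))            # False: outside the boundary
print(guard.authorize("issue_refund", cost=60.0))   # True
print(guard.authorize("issue_refund", cost=60.0))   # False: budget exceeded, agent halted
```

Keeping these checks outside the agent's own reasoning matters: the agent can propose anything, but only approved actions ever run.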

6. Privacy Focused AI Design: Will Users Demand More Than Compliance?

By 2030, users will expect privacy by default. They want AI that protects their data, explains how it uses information and gives them control. Brands that adopt privacy-first AI now will gain long-term trust.

Companies like Apple are already using privacy as a marketing advantage. Others will follow because customers value transparency more than ever.

7. Human in the Loop Becomes Human at the Center

Oversight will evolve from simply reviewing decisions to guiding AI behavior actively. Humans will remain the core decision makers. AI will support, not replace them.

Businesses that train teams in responsible AI usage will have safer deployments. They will also gain better results because human judgment adds nuance that AI cannot match.

8. Why Preparing Now Matters More Than Ever

By 2030, companies with strong governance will innovate faster because they will not waste time solving avoidable failures. A Deloitte study predicts that governance-aligned organizations will reduce operational risks by up to 45 percent.

Your Responsible AI Governance Checklist for 2026

If you are unsure whether your AI is safe, compliant and trustworthy, use this quick checklist. It helps you confirm that your governance foundation is strong enough for real-world use.

Tick each item honestly. The more checks you have, the stronger your AI readiness:

1. Data and Ethics

- Data sources are verified, clean, and documented

- Sensitive data follows privacy rules

- Fairness checks are completed before deployment

- Training data reflects real-world diversity

2. Risk and Compliance

- You have a risk rating for every AI system

- High-risk models have human review steps

- EU AI Act and NIST guidelines are considered

- Compliance documentation is stored and accessible

3. Technical Controls

- Drift monitoring is active

- Bias detection tools are in place

- Explainability tools are integrated

- Version control and model registry are set up

4. Operations and Oversight

- Model owners are clearly assigned

- Governance roles are defined

- Third-party AI tools are reviewed

- Performance audits happen regularly

5. User Trust and Safety

- Decisions are explainable to users

- Customer feedback loops are active

- High-impact outputs have human supervision

- Incident response plans are ready

Wrapping Up

Responsible AI Governance is no longer something you adopt later. It is the foundation that keeps your systems stable, your customers confident and your business ready for the future. The companies that succeed with AI are not the ones that move the fastest. They are the ones that build responsibly, measure consistently and improve with intention.

If this guide helped you see where your strengths and gaps are, take the next small step. Strengthen one pillar, review one model and fix one risk. Momentum builds quickly when you start with clarity.

And if you ever need support with audits, governance setup or safe AI development, the team at SoftProdigy can help you move forward with confidence. Your AI has the potential to transform your business. With the right governance, it will.